This is a sort-of transcript of a talk I gave a couple of times last year. If you’d prefer you can watch one of the recordings:

Alternatively you can read through the presentation with my notes here.

This blog post goes into more detail, as I don’t have to constrain myself to a twenty minute slot. However, I know lots of people prefer to have a video or audio transcript these days that they can watch at a higher speed, or even skip through, so the recordings/presentation are provided above.

Even though the talk could have been a short five minute “lightning talk” I intentionally made it longer to build up to the final point. And part of building up to that is to highlight some of the contradictions and complexities in this space. Also nuance. Nuance is difficult to get across in text, but I do think I’m improving at that. The recordings may be better in that aspect.

I do think that nuance is being lost in the race to automate, summarise, and shorten everything, which is a shame as nuance is part of what makes things interesting and more, well, relatable. Stripping that all out, removing the voice of the author and their idiom? It really shouldn’t be happening in many places. It reminds me of the opening of Bradbury’s novel:

“It was a pleasure to burn. It was a special pleasure to see things eaten, to see things blackened and changed.”

That’s what I sometimes feel is happening at the moment.

Sometimes it’s not even nuance, it’s stuff missing the mark completely. Even this tiny little slice of the web isn’t immune from it. I posted a couple of things recently that got a bit of traction on Hacker News and inevitably they got reposted elsewhere.

In one particular case the repost is clearly some (fake?) person’s LinkedIn bot scraping the front page of Hacker News and summarising the links with AI very poorly, not understanding the content of the posts and seemingly just going by the titles, and then highlighting them as noise in their feed as if they had written it themselves:

The link was pointing to this post, and to confirm that:

Arthur Howell needs to change their last name to Dent, because clearly their never-ending feed here is just screaming “COMPUTER DO SOMETHING!”.

And it’s not “our” post, it’s my post you absolute gobshite.

Think that’s the only one? Of course not:

That’s from “Data For Science, Inc”, briefing #281. This one is so unbelievably meta-bad I don’t think I even need to say much more about it. If you’re putting this stuff out into the world you have a responsibility to: a) proof read the thing you’re putting out into the world, and b) read and understand the things your thing is referencing then repeat step a to apply corrections.

If you’re not doing that then you are contributing to the ever growing pile of garbage that is the internet, and like an enthusiastic puppy the LLMs will continue to gobble up that crap without restraint. Everyone is a publisher these days, and it seems most are really bad at basic editing.

Anyway.

Musings on Generative AI

Given this is a post about generative AI, and more specifically photography, I decided to have a “hero image”. I gave DALL-E a slightly absurd prompt to see what it would come up with: “A pickup truck, battered and dented, has crashed into the side of a dilapidated building. Overgrowth of prickly pear cactus envelops the truck, their spiky pads and yellow flowers contrasting starkly against the metal frame and cracked windows. The sky above is a clear, vivid blue, offering a stark contrast to the chaos below.”

The result? Not bad:

I should say that “not bad” is from the point of view of being a software engineer. Impressive almost, even though DALL-E has ignored some of my prompting. From the point of view of a photographer? It’s terrible. If you showed me this I’d think you were taking the piss.

But, the way you get good at something is to start at being not good at that thing. Then spend the time to get good. So let’s cut DALL-E some slack.

You could argue this is a contrived example, and that’s fair. My Instagram explore feed is full of posts that are created by generative AI, and on a technical level they are very impressive. On a “photographic” level they are bad. I will get to why.

Some Quotes To Start

- “Today everything exists to end in a photograph”

- Susan Sontag (1977)

Sontag wrote a series of essays in the 60s and 70s that were compiled into a book called “On Photography”. Think of the book as the Brooks of photography essays: a collection of thoughts on the state of a thing. I haven’t read it in a long time, but this is the book’s canonical quote. It was a prescient thought, long before digital imagery and phones with cameras.

Sontag was the partner of Annie Leibovitz, who started her career shooting for Rolling Stone magazine. She then went on to shoot for glossy fashion and lifestyle magazines. Her later work attracted some controversy and hasn’t aged well. Some of it now looks overly processed, manipulated, and staged: photos that look like they were made with generative AI.

- “When you put four edges around some facts, you change those facts.”

- Garry Winogrand (1928-1984)

Winogrand was the most prolific street photographer of all time, probably. Some of his photographs are remarkable. It’s the kind of work that you don’t really get until you’ve spent time looking at lots of photography, and trying to build your own body of work.

Winogrand was also a good example of the numbers game that a lot of photography is. Especially in street photography. You have to throw a lot of shit at the wall to ensure some of it sticks, and Winogrand threw an awful lot. At the time of his death he had over a quarter of a million photographs unprocessed.[1]

Winogrand also said “You have a lifetime to learn technique. But I can teach you what is more important than technique, how to see; learn that and all you have to do afterwards is press the shutter.”

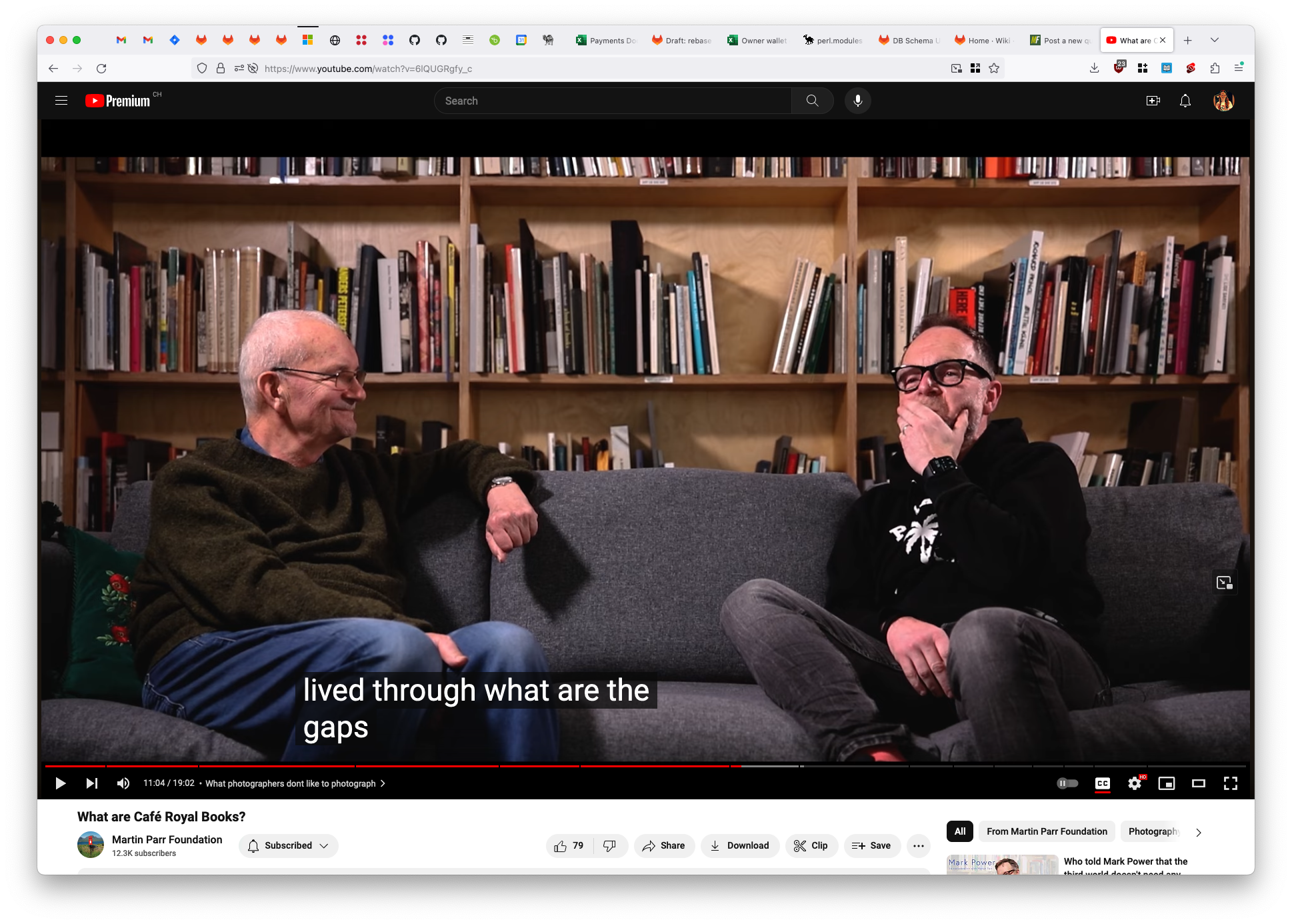

- “What are the gaps?”

- Martin Parr, 2024

Parr caused a bit of a stir when his colour work first got published in the early 1980s. His work was dismissed as vernacular, “snap shots”, and his attempt to join Magnum led to outcry from other members. The existing collective of war photographers and photojournalists was not interested in his work, and couldn’t understand how his approach had any value.

Parr scraped in by a single vote. He ended up as president of the Magnum photo agency for a few years recently.

Hype Hype Hype!

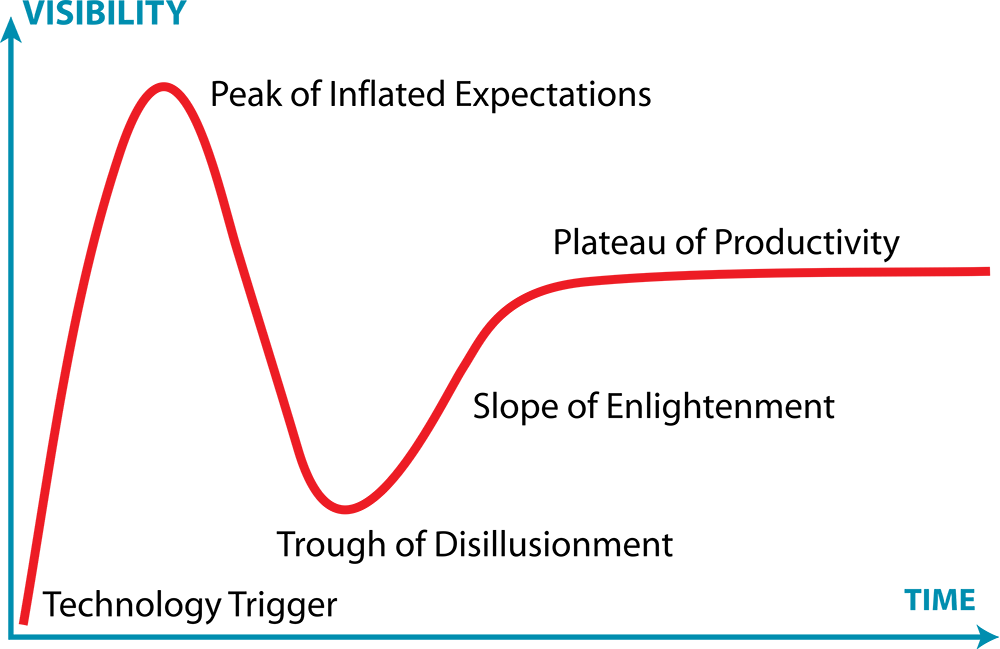

If you’re of a certain generation you’ll probably recall a lot of the following.

- Deep Blue - When IBM beat Kasparov in 1997 and we all thought we were doomed and nobody would ever play chess again? Chess remains pretty popular.

- IBM Watson - “Natural language” stuff, it won Jeopardy in 2011

- Siri - Also 2011, don’t think it was ever a contestant on Jeopardy though

- Big Data - Meh, CERN have been doing that for decades.

- Crypto currencies - The less said the better at this point?

- Blockchain - Actually has real world applications outside the hype.

- NFTs - Let’s pretend these never existed (is that too meta?)

- Virtual Reality - It’s back! Augmented reality at this point though, not virtual reality.

- Chat assistants - Incredibly annoying for power users

- Micropayments - Never got anywhere, blame Visa/MasterCard?

- The Semantic Web - Was the next big thing when I was doing my Masters, in 2003.

- XML - Still everywhere, and even growing, but JSON/YAML/TOML kind of replaced it in many places.

- Rust - The language that’s going to solve all of our problems!

And now Generative AI and LLMs. Isn’t this just MapReduce on steroids?

Of course they’re all examples of the above, the Gartner Hype Cycle. Most of them have found real world useful applications, but some of them are still stuck in the trough.

With generative AI it feels like we’re currently atop the Everest of peaks. That peak is so far up it’s smashing out of your screen and poking you in the eye. How the hell do we get back down from this? Generative AI is being shoehorned into everything. Often poorly.

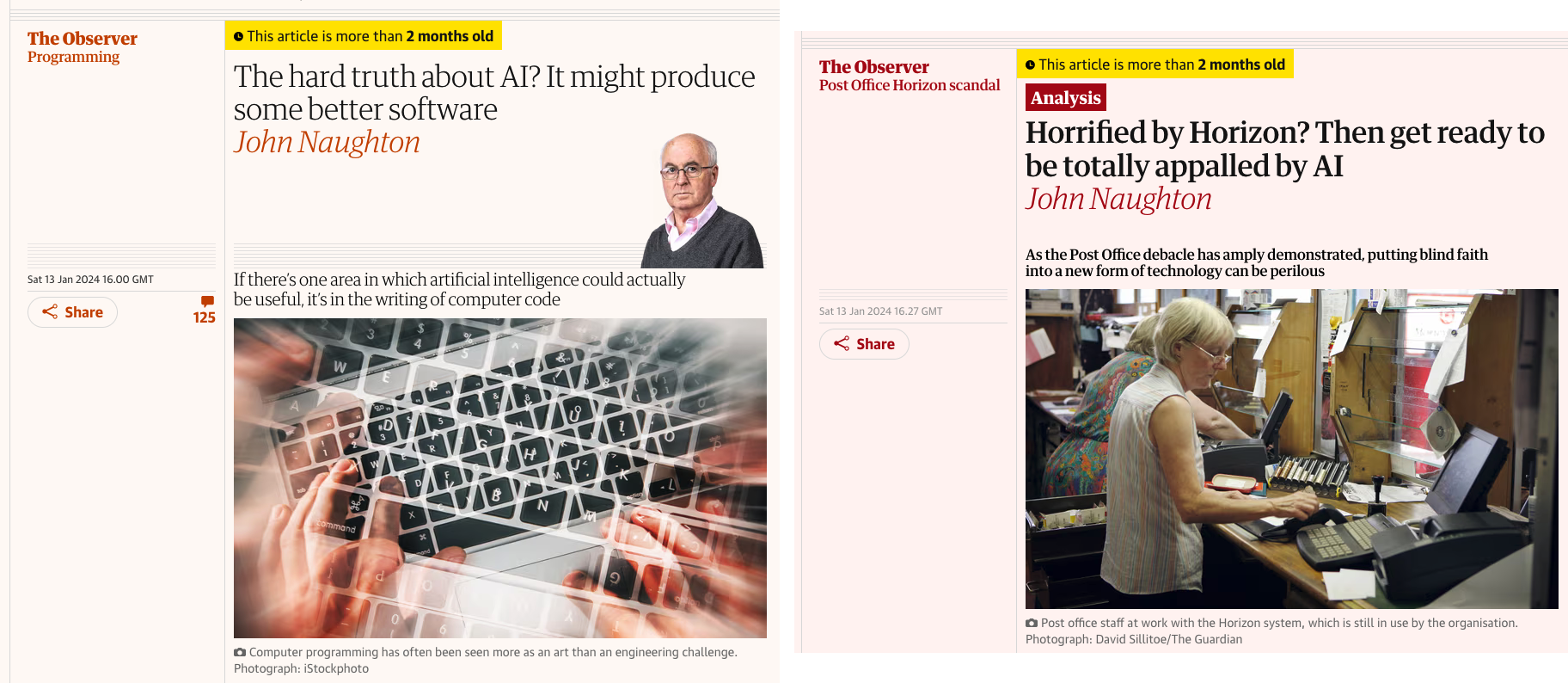

I’ve been collecting examples of these for the last year or so. I figured I’d go through some of them to evaluate their effectiveness. The collection includes editorials and the news cycle, like the article on the left below - a relatively fair one from the beginning of 2024.

“The hard truth about AI? It might produce some better software” - That’s not a hard truth, it’s a truth. Of course, the compulsory pointless stock photo image is included, this is exactly what programming looks like, smashing at the keyboard.

Then an article from the same writer, on the right, published 27 minutes later: “Horrified by Horizon? Then get ready to be totally appalled by AI”. It’s like the editor asked the author for an opinion piece on the Horizon scandal and requested AI be shoehorned into it.

These two articles aren’t actually at odds with one another, but them being published 27 minutes apart was just, well, bizarre.

For those not aware - the Horizon scandal involved the British Post Office pursuing thousands of innocent subpostmasters for apparent financial shortfalls caused by faults in Horizon, an accounting software system developed by Fujitsu.

Between 1999 and 2015, more than 900 subpostmasters were convicted of theft, fraud and false accounting based on faulty Horizon data, with about 700 of these prosecutions carried out by the Post Office.

Families were destroyed, innocent people were jailed, and several people took their own lives.

It was a colossal fuckup that was denied and covered up for years, and it’s only in the last twelve months that the wrongs have started to be corrected. This will include over one billion pounds of compensation.

This had absolutely nothing to do with AI.

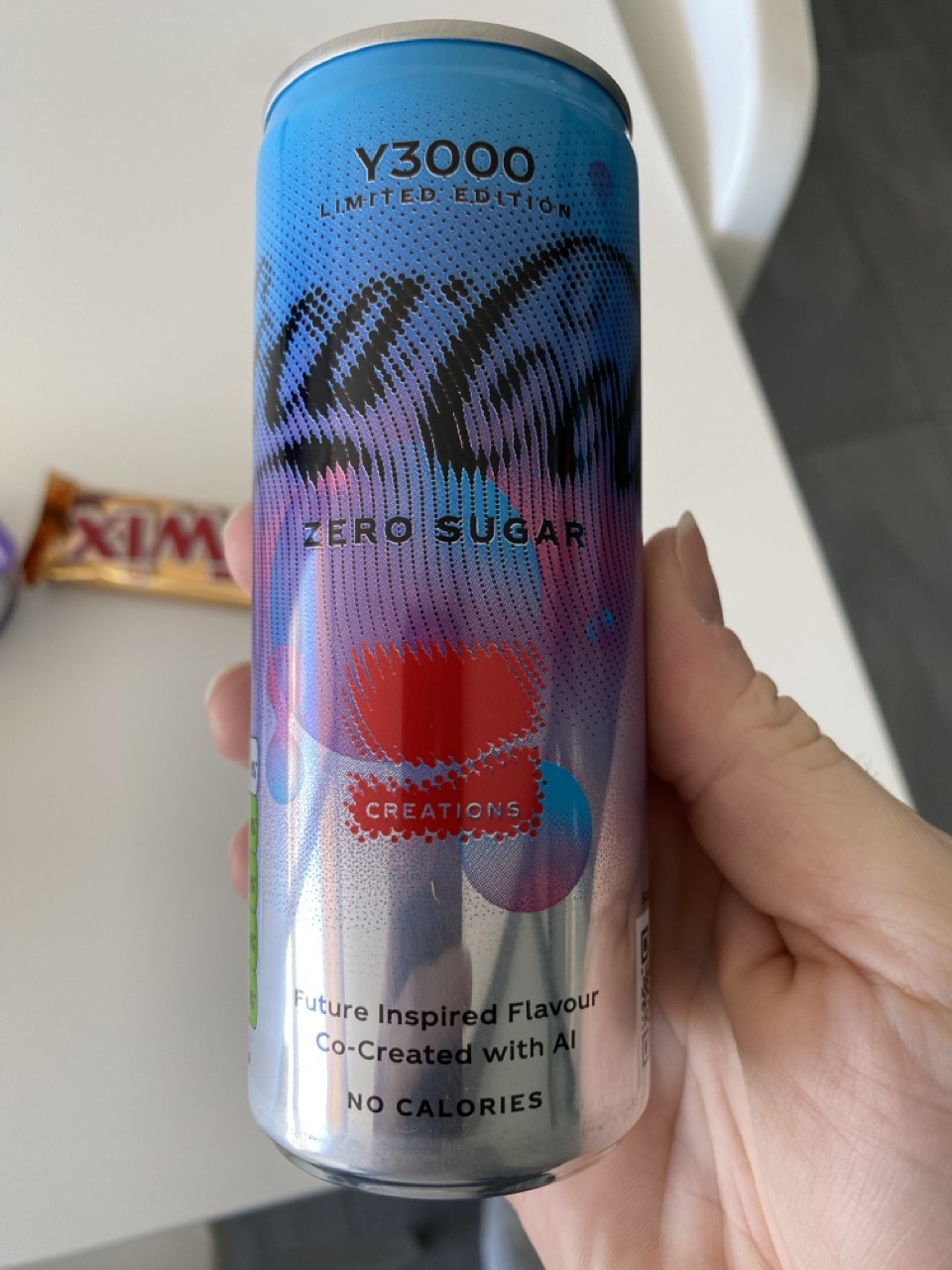

Minimum Viable Product

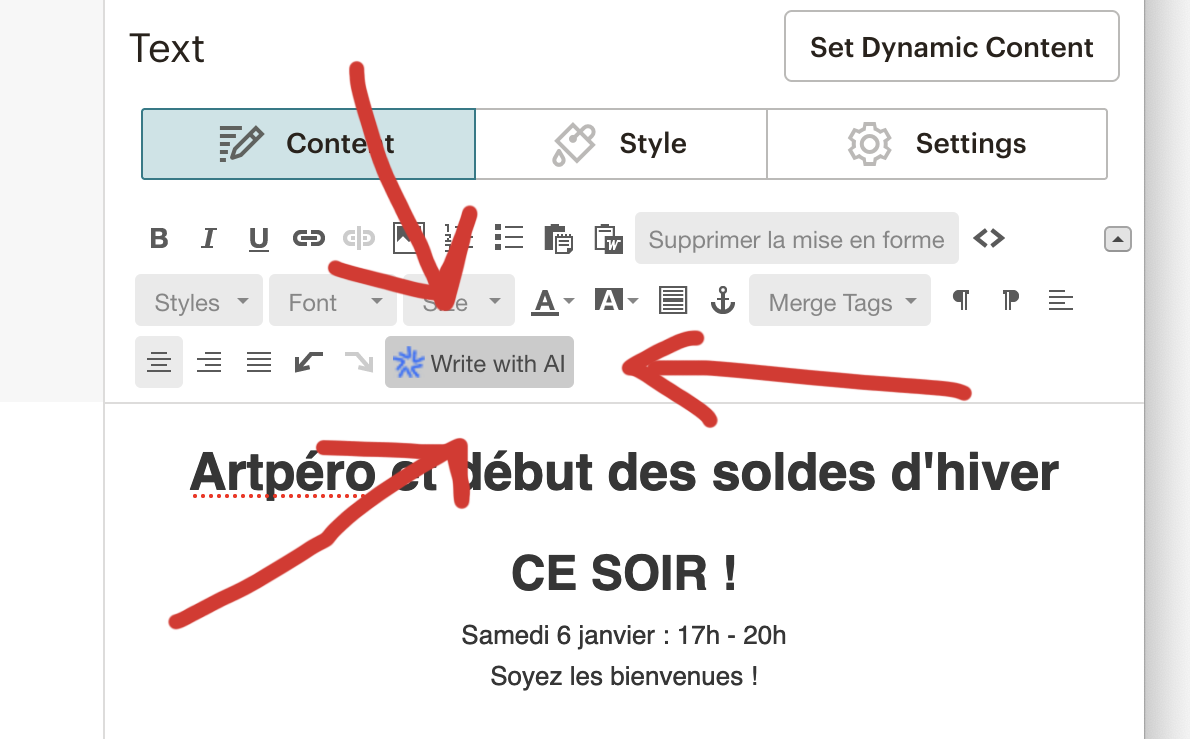

Of course, functionality that leverages AI is being added to many a product. We use Mailchimp to send out notifications of events at the Gallery, and they added AI tools recently. Generate your email content with AI:

When I saw this I thought, well ok have a go then. It generated the following:

Hey there! Warm up this evening with our apéro and start-of-winter sale from 5pm to 8pm. Come snag some hot deals, sip wine, and enjoy the cozy vibes. It’s the perfect way to kick off the chilly season. See you there!

I mean, it’s alright. Maybe a bit too colloquial, or at least lacking the idiom I usually write these in. And maybe too wordy? People don’t read emails. According to the analytics about 50% of the people we send these emails to open them, and that is exceptionally high for our sector.

Mailchimp will have stats on this, but surely keep it short and to the point?

Oh yeah, and it needs to be in French. As evidenced by the existing copy I had already put into the text box.

So I said “write it in French”.

And Mailchimp replied “Oops! We had issues handling your request”.

So I repeated myself, “il faut écrire le texte en francais”:

“We don’t support that language.” Minimum viable half-assed product. This is literally a couple of API calls to handle. Either translate the request, or translate the response.
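To make the “translate the request, or translate the response” point concrete, here is a minimal sketch. Everything in it is hypothetical: `generate_copy` stands in for an English-only text generator and `translate` for a translation service. Both are toy stubs backed by a tiny phrasebook so the example runs on its own; neither is Mailchimp’s (or anyone else’s) real API.

```python
# Hypothetical stand-ins only: a toy phrasebook replaces a real
# translation service so the sketch is self-contained and runnable.
PHRASEBOOK = {
    ("fr", "en"): {
        "vente d'hiver ce soir": "winter sale this evening",
    },
    ("en", "fr"): {
        "Join us: winter sale this evening":
            "Rejoignez-nous : vente d'hiver ce soir",
    },
}


def translate(text: str, source: str, target: str) -> str:
    """Toy translator (assumption): looks the text up in the phrasebook."""
    if source == target:
        return text
    return PHRASEBOOK[(source, target)][text]


def generate_copy(prompt_en: str) -> str:
    """Toy English-only generator standing in for the AI call."""
    return f"Join us: {prompt_en}"


def generate_localised(prompt: str, lang: str) -> str:
    # Call 1: translate the request into the language the generator supports.
    prompt_en = translate(prompt, lang, "en")
    draft_en = generate_copy(prompt_en)
    # Call 2: translate the response back into the user's language.
    return translate(draft_en, "en", lang)


print(generate_localised("vente d'hiver ce soir", "fr"))
```

A real integration would also need language detection and error handling, but the shape is the same: wrap the generator with a translation step on the way in and on the way out.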

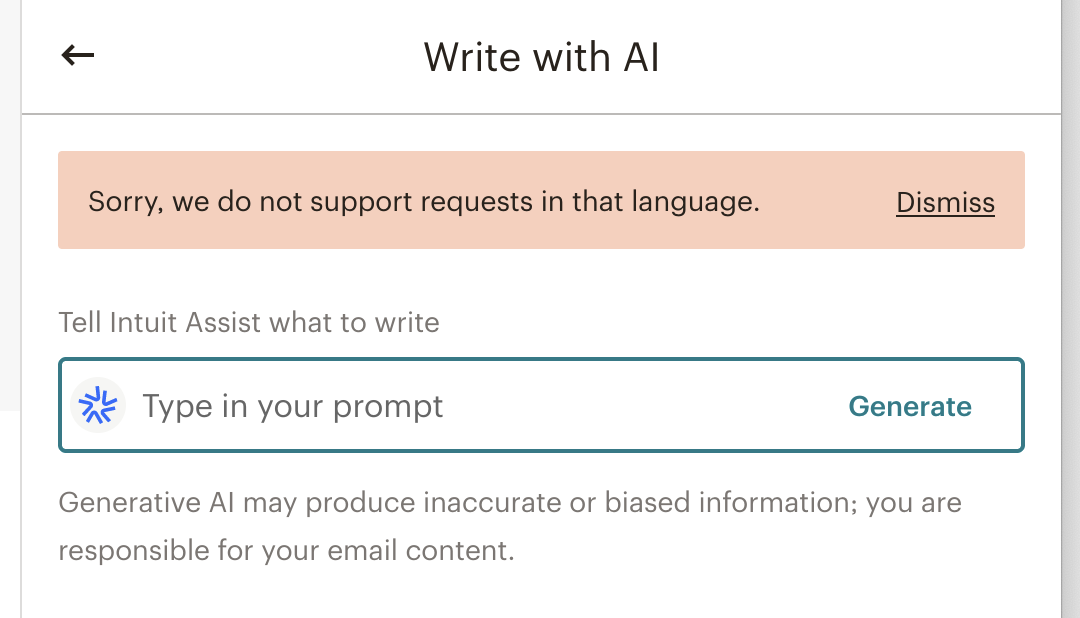

How about this:

That’s right: A Stearman Press SP-445 Film Development Tank. It’s pretty niche.

I was selling a lot of film kit at the end of last year, including this, so listed it on eBay with the following description:

As title. I’m sure you know what this is. I have put 400 frames through one of these and it continues to work flawlessly. Light tight and easy to use. The best system for developing 4x5 film at home.

The other I bought as a backup but haven’t used. They also come with instructions and a spare set of the original holders.

eBay suggested I let AI draft it for me:

So I said, why not? It generated the following:

Introducing the Stearman Press SP-445 Film Processing System with two extra holders for your convenience. This developing kit is compatible with various film types including 4x5, black and white, color, and colour. The tanks and drums are designed to provide efficient and high-quality film processing, making it a great addition to your darkroom and developing equipment. This package includes two spare holders, making it easier for you to process multiple films at once. The Stearman Photo brand ensures that you are getting a reliable and durable product for your photography needs. With its sleek black color, this kit will not only be functional but also aesthetically pleasing. Upgrade your film processing experience with the Stearman Press SP-445 Film Processing System.

This is like Reddit comment level word salad. Use some paragraphs for Christ’s sake.

It’s wordy marketing bollocks. Anyone looking at this item on eBay would already know all this anyway. Nobody is stumbling across this item and thinking “oh yes, what I really need in my life is a 4x5 film developing tank”.

The AI is pulling from existing blurbs and reviews about this, but the review space is shallow because it’s a niche product. It can’t tell you what it’s actually like to use the product for real because it cannot and will not ever use it.

It tells us nothing new here, it’s lacking any informative insight. And it’s wrong. It’s available in “color and colour”? The spare holders don’t allow you to process multiple films at once, that’s not how this works.

Oh, and it’s aesthetically pleasing? No darkroom equipment is ever aesthetically pleasing, it is purely functional - it has to work in the dark.

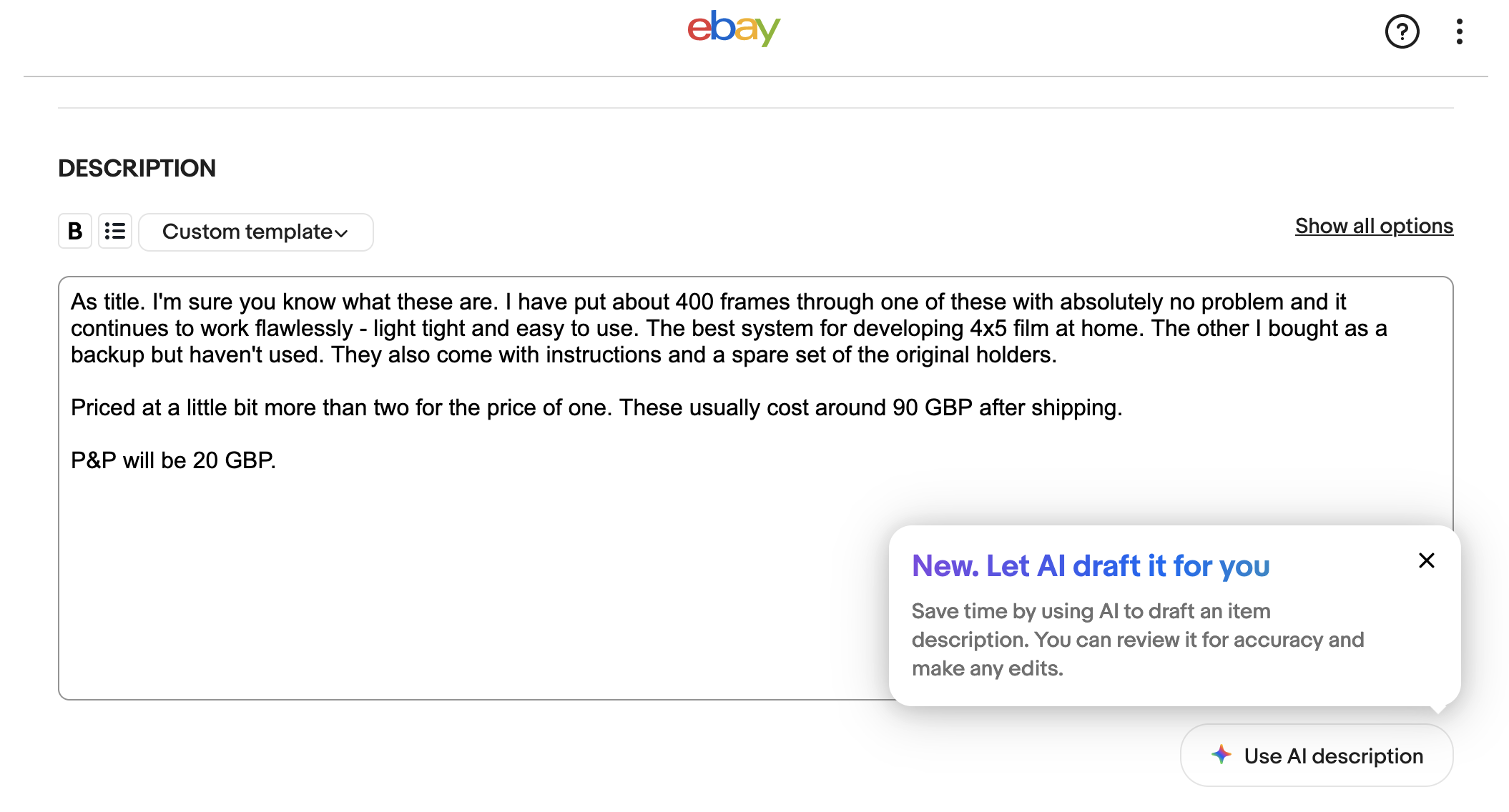

How about this:

“Future Inspired Flavour Co-Created With AI”… yum 😋 Is this the “New New Coke”?

What Do You Think About All This “AI” Stuff?

I get asked this relatively frequently, as I’m sure you do. I’m a software engineer so I must have some compelling insight on it, right?

I mean, it’s alright I suppose, but really it’s like this:

The burden is shifting to curation and review. I feel like I have to proof read and review enough stuff already. Half my day or more is proof reading and review: code reviews, tickets, documentation, support requests, emails, commit messages.

And sure, I could chuck AI at it but I’d still have to proof read and review the output. If you’re not doing that then you’re failing basic due diligence.

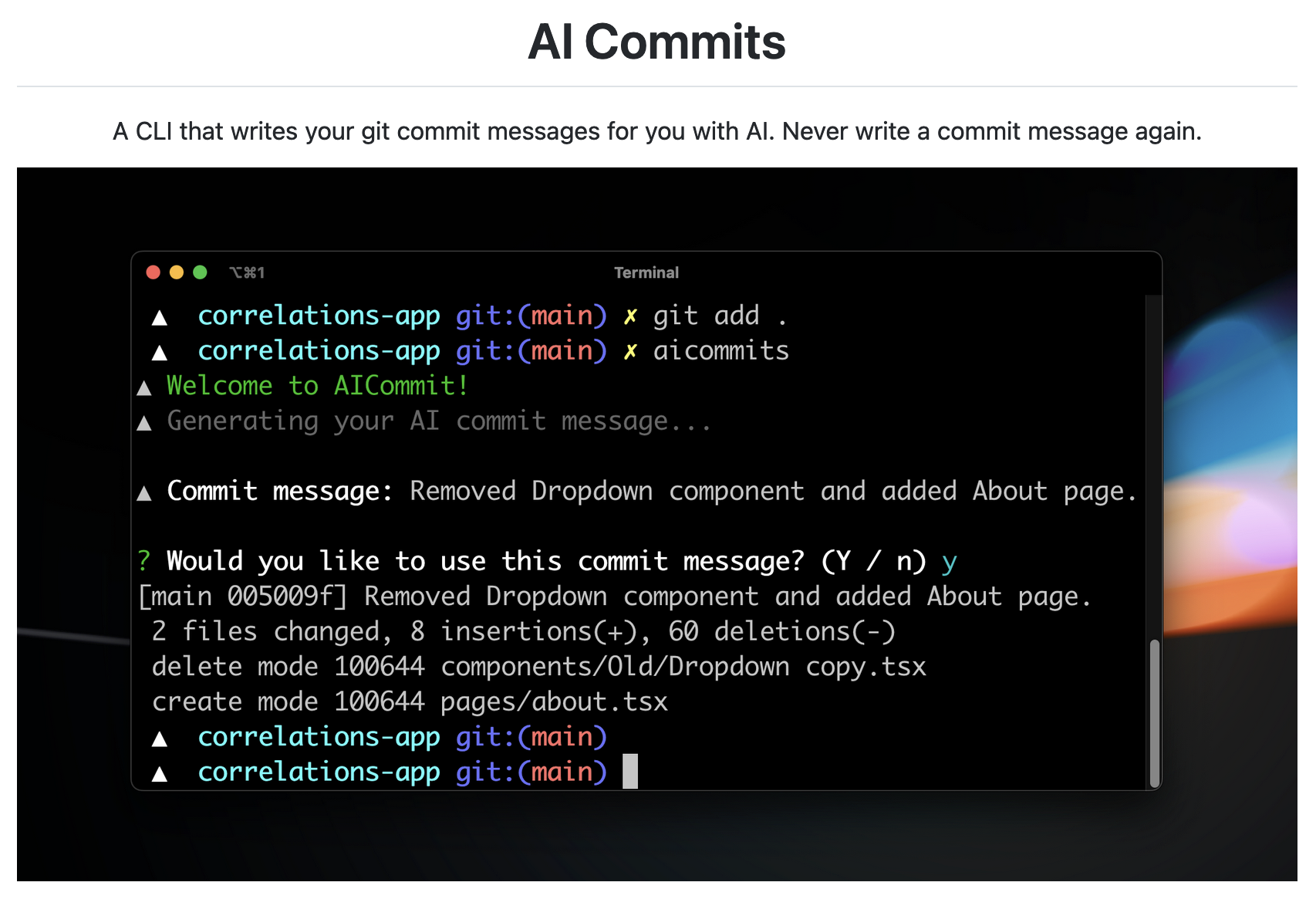

It feels like we’re at this inevitable end state:

“AI commits. A CLI that writes your git commit message for you with AI. Never write a commit message again”.

7,300 stars on GitHub. The repo itself has an average commit message length of 120 bytes. That includes the Date and Author headers, so they’re obviously dogfooding this.

If you use a tool like this you fail at software engineering and I hate you.

Manipulation and Lies

But anyway: photography. Should we be concerned about “AI photography”, or generative AI in photography? How is generative AI going to change it? What about the criticisms and dismissals of it not being real photography? What exactly is real photography? I’d like to suggest that “real”, or “pure” photography is idealism, and often naive.

Also, this generative AI stuff has existed in photography for much longer than the last couple of years. Remember this one:

Refresh the page and you’ll see a new face every single time. This is from thispersondoesnotexist.com, which appeared in early 2019. A site that shows faces generated by AI.

But I’d like to suggest that the person you’re staring at above probably does exist. The odds are good with eight billion people on the planet. Refresh the page - person probably exists? Refresh - person probably exists? What does it mean to say “this person does not exist”?

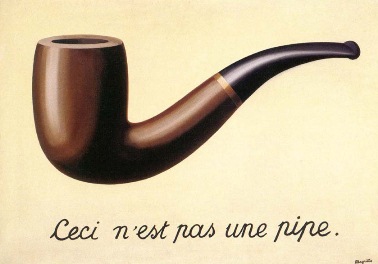

René Magritte said it in 1929:

- The word is not the thing

- The map is not the territory

- The portrait is not the person

All photography is a lie, or at the least a partial truth - “When you put four edges around some facts, you change those facts.” The early 2020s will go down as an epochal period when most people learned to distrust photos. They were only about a century late to this realisation.

Photos can manipulate the viewer’s perception and emotions. They can be doctored, staged, or even fabricated entirely. Photos can also be misleading due to their selective nature and the way they are presented. Sometimes this is not the intention. Take the following recent example:

This was a really sad situation, given the reason we hadn’t seen Kate for a while was because she was undergoing cancer treatment, and inevitably the photo was going to be torn apart the moment it was released; examined at a 1000% magnification in Photoshop by technicalists ready to pounce on any misplaced pixel. We trust nothing that might be PR, but we trust everything else?

“Photography Is No Longer Evidence of Anything” wrote WIRED in response to this situation, but that has been the case for a very long time as they admit in the article.

What we need to ask is whether it is surprising that the most PR controlled and manipulated family on earth is going to also have their photographs manipulated?

No. Not at all.

Should we care?

No. Not at all.

Because every single photo you see or take is manipulated in some way. It’s practically impossible to take a photo with most devices (like your phone) without computational photography being involved. Sometimes it’s astonishingly good, and sometimes it’s astonishingly bad.

The important consideration is intentions. I don’t think there were any bad intentions at play in the above photo, just a thousand tiny bad editing decisions either by a human or a computer. Like the Leibovitz photos I mentioned earlier.

Going back, before computational photography - your camera or film stock would inform your approach. Your framing decisions. Your darkroom technique. Your lens choice.

But you know what the above photo kind of reminds me of?

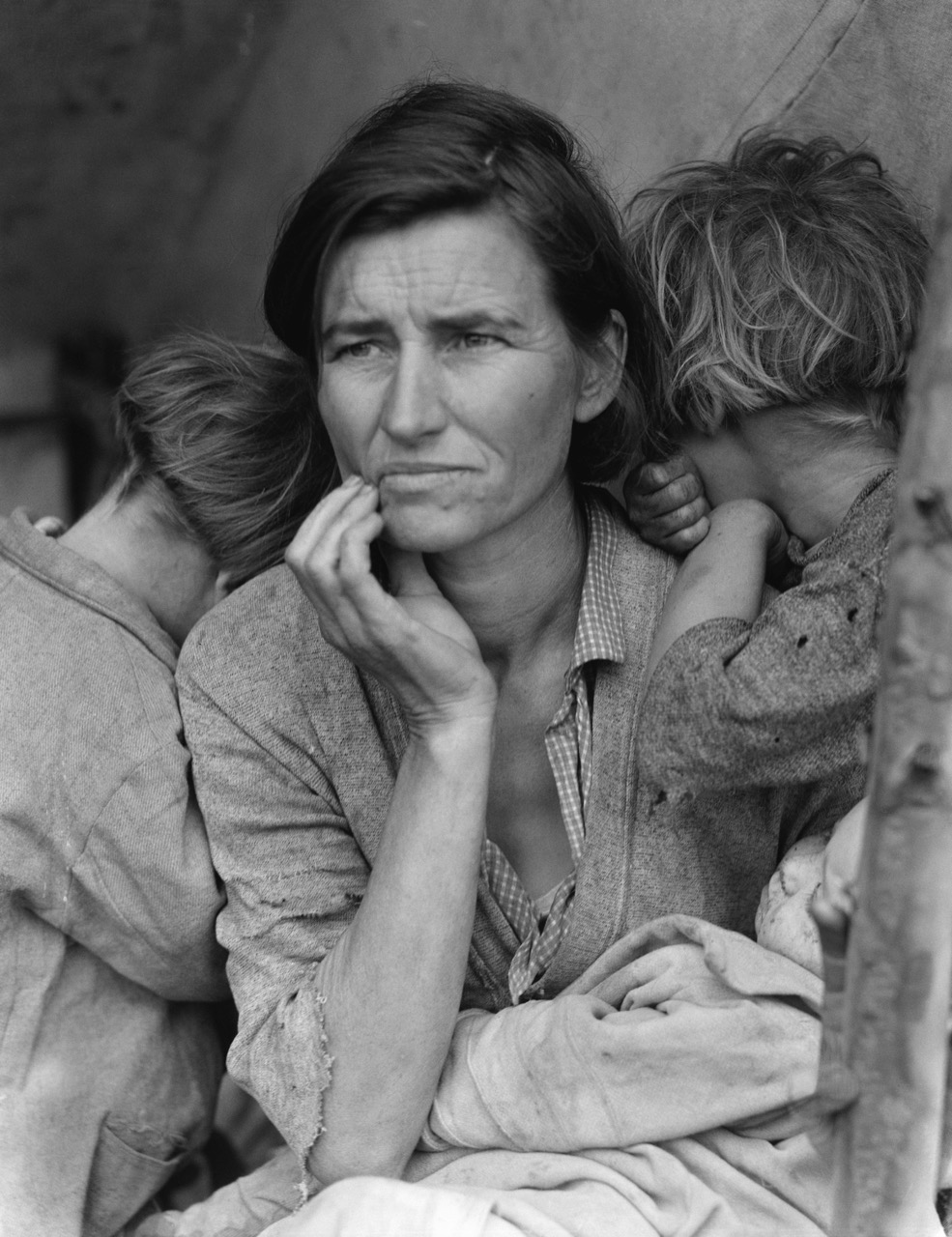

Going back almost 90 years - “Migrant Mother” 1936, by Dorothea Lange. The photo came to symbolize the hunger, poverty and hopelessness endured by so many Americans during the Great Depression.

Notice anything odd in the bottom right hand corner? Probably not at this scale so let’s zoom in:

While the image was being prepared for exhibit in 1938, the negative of the photo was retouched to remove a thumb from the lower-right corner of the image.

Is this any different to the Kate photo?

However, the more interesting thing is that the intentions of the photo and its story about the subject were misleading. The woman in the photo wasn’t actually a migrant, and was a Native American. A reasonably well off one it turned out: she was sat waiting at a migrant camp as her car had broken down, and she was waiting for her husband to return with the repair material.

Intentions matter.

Ansel Adams: Yosemite Valley, Yosemite National Park, 1934. Also 90 years ago. Adams was the OG landscape photographer, wanting to show the beauty of nature. He literally wrote the book(s) on photography: “The Camera”, “The Negative”, and “The Print”.

Adams went on to be a cofounder of the “f.64 Group”, that was dedicated to the promotion of “pure” photography, defined in their manifesto as “possessing no qualities of technique, composition or idea, derivative of any other art form.”

Which is a bit weird, given darkroom printing is a lot like painting, and his images were heavily manipulated in the darkroom. Dodging, burning, cropping, contrast boosting, sharpening, sometimes even turning day into night.

But that’s alright? Do as I say not as I do?

Raising a flag over the Reichstag, 1945. This is the original unretouched image, that includes the senior sergeant with a watch on each wrist. That’s not the issue here, rather that the entire image was staged by the photographer himself. To the extent that he even supplied the flag.

I don’t know how you can manipulate a photo more than this? It’s almost like photography can be a form of propaganda?

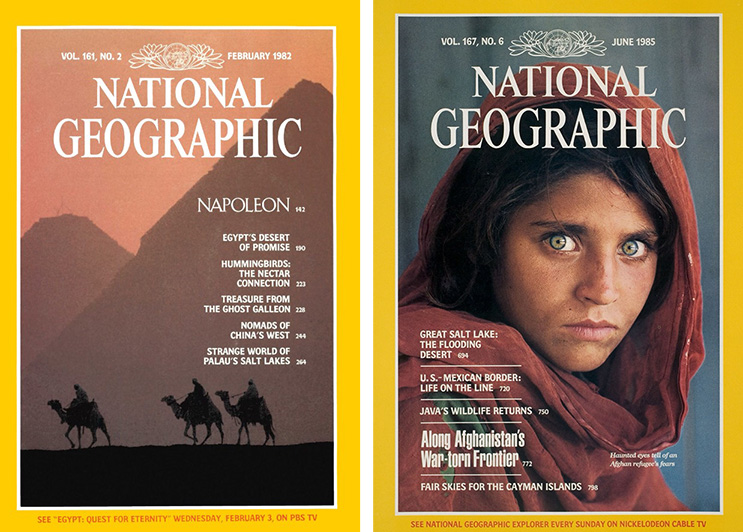

National Geographic, the bastion of photo journalism and reporting. The problem on the left? The pyramids were too far apart to fit a portrait style cover so they were moved closer in post. The photographer explained that the magazine had done this without his permission and he was annoyed about that.

However, it later turned out that the photographer had paid the camel riders to go back and forth until he got the perfect shot. So: manipulation before the photo OK, after not OK? Ehhhh, I’m not so sure they’re that different.

On the right: Steve McCurry’s “Afghan Girl” cover. A photographer who was prolific not just in his field but also in his use of Photoshop: McCurry was discovered to be a serial post producer, often removing or replacing large parts of photos.

He defended this by saying “I’m not a photojournalist”. Which is a bit odd given he spent forty years as a photojournalist.

“I’m Not A Photojournalist”

So McCurry is essentially saying that he’s a fine art photographer, which is a euphemism for “I can do whatever I want”. Which is fine, plenty of photographers make a good living in that space, but I don’t think you can proclaim some sort of revisionist history without acknowledging the potentially decades of manipulated work.

And there are plenty of photographers working in the fine art space who openly admit to manipulating their work either before or after the photo is taken. Take Gregory Crewdson for example:

His photographs are elaborately planned, produced, and lit using large crews familiar with motion picture production who light large scenes using cinema production equipment and techniques. He employs a team of artists and technicians to create the illusion of reality, and uses actors as his subjects.

Every part of every photo is meticulously planned. These are heavily influenced by paintings from Hopper, films by Lynch, and so on.

He will sell out his entire print run for a project before he has even shot the photos.

Andreas Gursky, Rhein II, 1999

This was produced in an edition of six. It is a heavily manipulated photograph, all the background detail was removed. Gursky is transparent about this - it’s part of the appeal of his work.

In 2011, a print of Rhein II was auctioned for $4.3 million (then £2.7m), making it the most expensive photograph ever sold (until 2022).

Murray Fredericks. His latest project involves shooting photos of trees that look like they’re on fire. He runs gas pipes up the back of the tree to give that impression.

A common criticism of Fine Art photography is “I could have shot that”.

Exactly, but you didn’t.

Successful fine art photography is largely about an idea well executed.

How about the artists up in arms about reuse, reworking, remixing, and reappropriation?

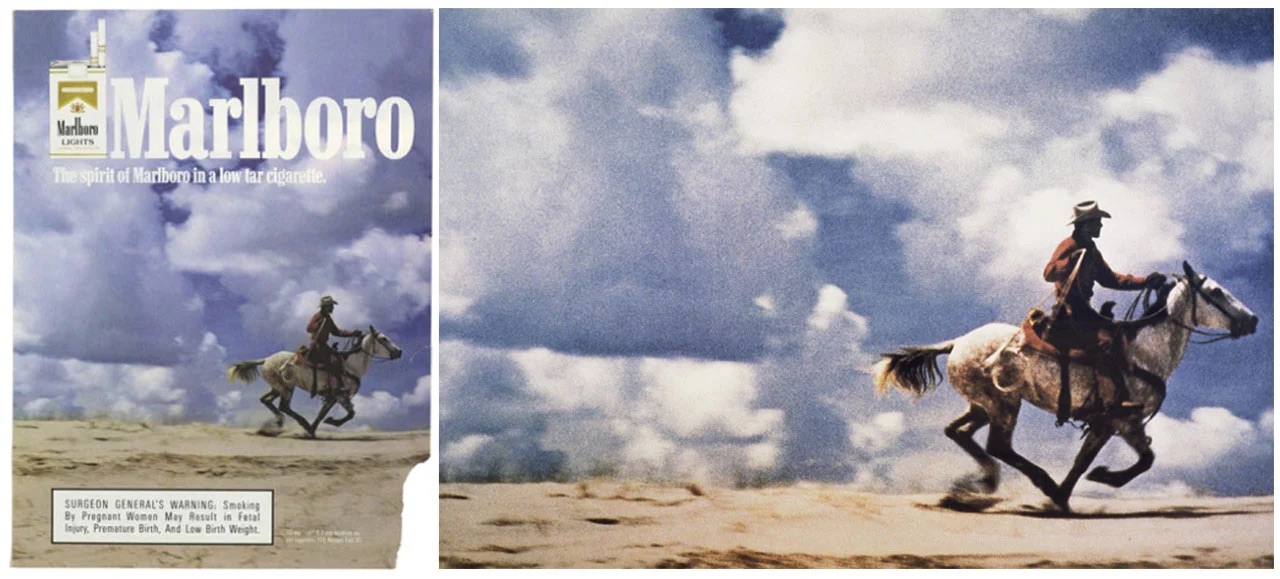

Sam Abell shot a series of photos for a Marlboro ad campaign. Richard Prince shot photos of those photos in a way that cropped out the text and logo. Prince’s picture is a copy (the photograph) of a copy (the advertisement) of a myth (the cowboy).

Prince’s photos sold for millions.

Prince has been sued multiple times by artists and photographers that were upset he had reappropriated their work. He has never lost a case.

Expectation vs Reality

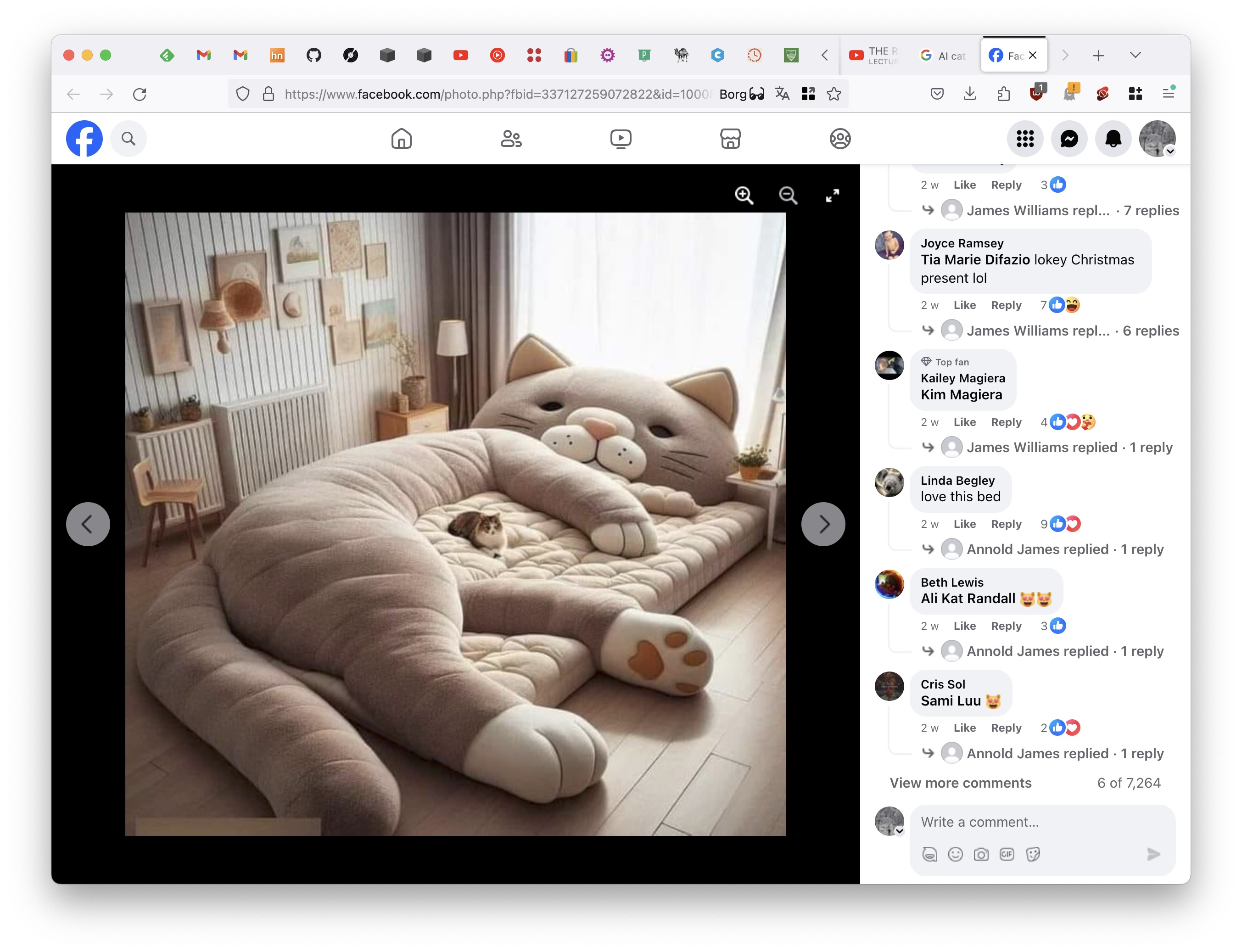

That’s all well and good, but what about actual generative AI when it comes to photography? The creation of an image from nothing but a prompt? How do we reconcile the expectation and the non-reality?

My partner showed me this at the beginning of last year. It’s clearly generative AI without even looking at the detail like the weirdly arranged frames in the background, or the half disappearing lamp. I mean, the sheer impracticality of the thing.

“Today everything exists to end in a photograph” is now “Today nothing needs to exist to end in a photograph”.

7,264 people (at the time of the screenshot) either don’t know or don’t care that this thing doesn’t exist. Probably some combination of the two heavily biased towards the latter.

Because why care about the implication of a non-reality? Why would the platforms care either when there’s that much engagement? Like moths to an enlarger lamp, the ads will keep flowing.

And this kind of thing is rife for use as bait:

Who looks at what is clearly AI concept art on the left and thinks the final product is going to come even close to it? OK, it’s a pretty shoddy job, but come on!

The thing is, it’s possible to do this well. IKEA have quietly been doing it for over a decade. Catalogs full of things that don’t exist, that do exist. You get what I’m saying here?

I think we can be pretty sure today 100% of IKEA’s photographs are generated. And stock photography? Commercial production work? Same. I’m sure there is plenty of “photography” we see now that is entirely generated, either by AI or otherwise, that gives us no clues that it was done so.

In fact, I wouldn’t be surprised if more than 50% of the imagery, or “photography”, we see now is generated from nothing, especially online.

The meaning of “photography” is changing.

Perpetual Change

Photography is… “a discipline established on a technological bedrock that shifts every so often … The next hundred years will continue to bring unimaginable change to the medium and to be surprised by this is your own damn fault.” - from The Meaning of Film’s Decline.

- 200 years ago: The first “photographs”: Fixed images

- 150 years ago: a rich person’s play thing: Collodion and Daguerreotypes

  - Involving a chemical process that had a good chance of killing you.

- 120 years ago: the Box Brownie

  - Photography was there if you wanted it.

  - Most people still didn’t want it, or couldn’t afford it, though.

- 100 years ago: 35mm film

- 80 years ago: Instant film

- 50 years ago: Digital photography (yes, that long ago)

- 25 years ago: Camera phones

- Now: Generative AI

Or, put another way: “You have to be a bit nuts to try this” then “You have to be able to afford it” then “You have to want to do it” then “You have to be there”.

Now? “You have to have an idea”. At least if you want to stand out, because everyone is everywhere all of the time with a camera and taking exceptionally well composed, nicely exposed, sharp, beautiful photos isn’t going to get you anywhere in the art world because that’s no longer interesting.

In fact it’s utterly mundane, calling yourself an “influencer” doesn’t change this.

But there’s still one question we have to ask ourselves: “What are the gaps?”

What Are The Gaps?

And that’s what the previous 4,500 rambling words lead me to here.

Café Royal Books is a small independent photozine publisher that releases weekly titles, focussing on post-war documentary photography linked to Britain and Ireland. Occasionally photography from outside the UK.

This is the sixth archive box set. They produce these every couple of years, collecting the last 100 books they have published. Each book contains fifteen to twenty unseen photographs.

The photos are often technically imperfect. But every book contains interesting and important work.

Filling in the gaps.

This is Martin Parr interviewing Craig Atkinson, who runs Café Royal Books. All the books in the background here as well are filling in the gaps. Full of photography beyond the technicalities like “Is it sharp”, “Is it in focus”, “Is it properly exposed”, “Is the horizon straight”, “Are the colours correct”.

None of these are really that important once you get to a certain level of understanding of photography beyond the technicalities. Because important and arguably interesting photography is predicated on filling in the gaps. We are awash with technically competent boring photography. Mediocrity.

Generative AI for most photography is either technically incompetent or not interesting. It’s often used as filler - all those hero images now occupying the headers of blog posts. It’s not quite got to the level of mediocrity.

Maybe it will soon, and maybe it will get beyond that. But it’s unlikely it will ever be able to fill in the gaps.

And you need to continue to ask this question. Not just when thinking about photography. But anywhere you might be using generative AI, LLMs, agents, or any other AI technology.

“What are the gaps?”

This is the real cactus car, I shot this in Santorini in September 2023.

[1] That is to say, not yet realised in print form.